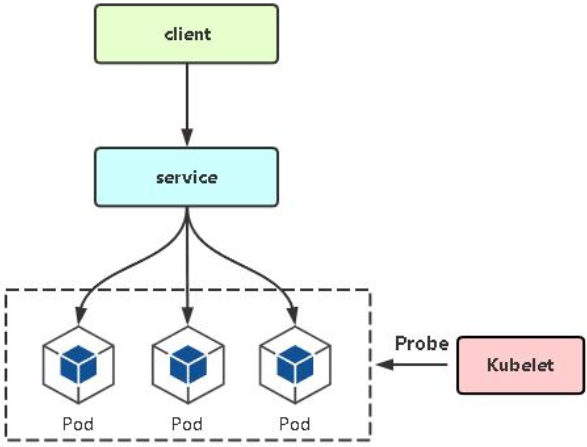

1. Probe Overview

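A probe is a periodic diagnostic that the kubelet performs on a container so that unhealthy containers can be detected and handled automatically. To perform a diagnostic, the kubelet calls a handler implemented by the container. There are three classic handler types (newer Kubernetes releases also support a gRPC check):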

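ExecAction:

- Executes the specified command inside the container. The diagnostic is considered successful if the command exits with status code 0.

TCPSocketAction:

- Performs a TCP check against the container's IP address on the specified port. The diagnostic is considered successful if the port is open.

HTTPGetAction:

- Performs an HTTP GET request against the container's IP address on the specified port and path. The diagnostic is considered successful if the response status code is greater than or equal to 200 and less than 400.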
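As a minimal sketch, the three handler types correspond to the `exec`, `tcpSocket` and `httpGet` fields of a probe. The Pod name, the command and the way the handlers are spread across the three probes below are illustrative assumptions, not part of the original examples; note that a single probe may use only one handler.

```yaml
# Illustrative only: the Pod name and the command are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: probe-handlers-demo            # hypothetical name
spec:
  containers:
  - name: web
    image: nginx:1.20.2
    ports:
    - containerPort: 80
    # ExecAction: succeeds if the command exits with status 0
    startupProbe:
      exec:
        command: ["test", "-f", "/usr/share/nginx/html/index.html"]
    # TCPSocketAction: succeeds if a TCP connection to port 80 can be opened
    livenessProbe:
      tcpSocket:
        port: 80
    # HTTPGetAction: succeeds if the status code is >= 200 and < 400
    readinessProbe:
      httpGet:
        path: /index.html
        port: 80
```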

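Each probe produces one of three results:

- Success: the container passed the diagnostic.

- Failure: the container failed the diagnostic.

- Unknown: the diagnostic itself could not be completed, so no action is taken.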

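Pod restart policy

Once a Pod has probes configured, what happens after a failed check depends on the Pod's restartPolicy: when a liveness (or startup) probe fails, the kubelet kills the container, and restartPolicy decides whether it is started again.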

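restartPolicy (container restart policy):

- Always: whenever the container terminates, Kubernetes restarts it automatically. This is the default, and Pods managed by a ReplicationController, ReplicaSet or Deployment must use Always.

- OnFailure: Kubernetes restarts the container only when it fails (stops running with a non-zero exit code).

- Never: the container is never restarted, regardless of its state. Pods created by a Job or CronJob use Never or OnFailure.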
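The fragment below is a hedged sketch showing where restartPolicy lives: it is a Pod-level field (a sibling of `containers`) and applies to every container in the Pod. The standalone Pod and its name are hypothetical; inside a Deployment template only `Always` would be accepted.

```yaml
# Hypothetical standalone Pod used only to show the field's position.
apiVersion: v1
kind: Pod
metadata:
  name: restart-policy-demo      # hypothetical name
spec:
  restartPolicy: OnFailure       # Always (default) | OnFailure | Never
  containers:
  - name: web
    image: nginx:1.20.2
    livenessProbe:               # a failing liveness probe gets the container killed;
      httpGet:                   # restartPolicy then decides whether it is started again
        path: /index.html
        port: 80
```

As far as we understand the kubelet's behavior, a container killed by a failing liveness probe is restarted under both Always and OnFailure, and stays terminated under Never.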

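imagePullPolicy (image pull policy):

- IfNotPresent: the image is pulled from the configured registry only if the node does not already have it; otherwise the node's local copy is used.

- Always: the image is pulled again every time the Pod is (re)created.

- Never: the image is never pulled from a registry; only the local image is used.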
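A minimal container fragment setting the pull policy explicitly. If the field is omitted, Kubernetes defaults to `Always` when the tag is `:latest` or missing, and to `IfNotPresent` otherwise, so with the pinned `nginx:1.20.2` tag used in this article the explicit value below simply restates the default.

```yaml
# Fragment for spec.template.spec.containers in a Deployment.
containers:
- name: myserver-myapp-frontend-label
  image: nginx:1.20.2            # pinned tag
  imagePullPolicy: IfNotPresent  # use the node's copy if present, otherwise pull
```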

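Probe types

Kubernetes can check the health of a Pod with three kinds of probes: livenessProbe, readinessProbe and startupProbe. The two most important are livenessProbe and readinessProbe, which the kubelet runs periodically to diagnose the health of the container.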

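startupProbe (startup probe, introduced in Kubernetes v1.16):

- Determines whether the application inside the container has finished starting. If a startup probe is configured, all other probes are disabled until it succeeds. If the startup probe fails, the kubelet kills the container, which is then handled according to its restart policy. If the container does not provide a startup probe, the default state is Success.

livenessProbe (liveness probe):

- Checks whether the container is still running. If the liveness probe fails, the kubelet kills the container, and the container is then subject to its restart policy. If the container does not provide a liveness probe, the default state is Success. In short, livenessProbe decides whether the container is restarted.

readinessProbe (readiness probe):

- Checks whether the container is ready to serve requests. If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of all Services that match the Pod. The readiness state before the initial delay defaults to Failure. If the container does not provide a readiness probe, the default state is Success. In short, readinessProbe decides whether the Pod receives traffic through a Service.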
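A common way to combine the three probes, sketched here under assumptions: the container name, image, port name and the `/healthz` and `/ready` endpoints are all hypothetical. The startup probe gives a slow-starting application up to `failureThreshold * periodSeconds = 30 * 10 = 300` seconds to come up, and liveness and readiness checks are held back until it succeeds.

```yaml
# Sketch only: container, image and endpoint paths are hypothetical.
containers:
- name: slow-app
  image: example.com/slow-app:1.0
  ports:
  - name: http
    containerPort: 8080
  startupProbe:                  # up to 30 * 10s = 300s to finish starting
    httpGet:
      path: /healthz
      port: http
    failureThreshold: 30
    periodSeconds: 10
  livenessProbe:                 # only runs after the startup probe succeeds
    httpGet:
      path: /healthz
      port: http
    periodSeconds: 10
    failureThreshold: 3
  readinessProbe:                # controls Service endpoint membership
    httpGet:
      path: /ready
      port: http
    periodSeconds: 5
```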

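Common probe configuration parameters:

Probes have a number of fields that you can use to precisely control the behavior of startup, liveness and readiness checks: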

- https://kubernetes.io/zh/docs/tasks/configure-pod-container/configure-liveness-readiness-startup-probes/

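initialDelaySeconds: 120

- Initial delay: how many seconds the kubelet waits after the container starts before performing the first probe. Defaults to 0 seconds; the minimum value is 0.

periodSeconds: 60

- Probe period: how often, in seconds, the kubelet performs the probe. Defaults to 10 seconds; the minimum value is 1.

timeoutSeconds: 5

- Per-probe timeout: the number of seconds after which a single probe attempt times out. Defaults to 1 second; the minimum value is 1.

successThreshold: 1

- The minimum number of consecutive successes for the probe to be considered successful again after having failed. Defaults to 1; it must be 1 for liveness and startup probes; the minimum value is 1.

failureThreshold: 3

- The number of consecutive failures after which Kubernetes gives up on the check. For a liveness probe, giving up means restarting the container; for a readiness probe, the Pod is marked Unready. Defaults to 3; the minimum value is 1.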
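To make the timing fields concrete, here are the liveness settings used in section 2.1, annotated with a rough worked estimate. This is back-of-the-envelope arithmetic rather than a guarantee; kubelet scheduling adds some jitter.

```yaml
livenessProbe:
  httpGet:
    path: /index.html
    port: 80
  initialDelaySeconds: 5   # first probe about 5s after the container starts
  periodSeconds: 3         # then one probe every 3s
  timeoutSeconds: 5        # a request that takes longer than 5s counts as a failure
  successThreshold: 1      # must be 1 for liveness (and startup) probes
  failureThreshold: 3      # 3 consecutive failures => the container is killed
# Rough detection time after index.html disappears (404 comes back immediately):
#   next probe within (0, 3]s, then two more failures 3s apart
#   => kill after roughly 6 to 9s, i.e. up to failureThreshold * periodSeconds = 9s
```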

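HTTP probe parameters:

HTTP probes accept additional fields under httpGet:

host:

- The hostname to connect to; defaults to the Pod IP. You usually want to set a "Host" header in httpHeaders instead.

scheme: HTTP

- The scheme used to connect to the host (HTTP or HTTPS); defaults to HTTP.

path: /monitor/index.html

- The path to access on the HTTP server.

httpHeaders:

- Custom headers to set in the request; HTTP allows repeated headers.

port: 80

- The name or number of the port to access on the container; a number must be in the range 1 to 65535.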
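An illustrative container fragment that exercises the httpGet fields listed above. The port name `http`, the `Host` value and the `X-Probe` header are hypothetical; the path is `/index.html` because that file exists in the stock nginx:1.20.2 image used throughout this article.

```yaml
# Container fragment; header values and the port name "http" are hypothetical.
- name: web
  image: nginx:1.20.2
  ports:
  - name: http
    containerPort: 80
  readinessProbe:
    httpGet:
      # host omitted: the kubelet connects to the Pod IP
      scheme: HTTP               # HTTP (default) or HTTPS, written in uppercase
      path: /index.html
      port: http                 # refers to the named containerPort above (80)
      httpHeaders:
      - name: Host
        value: www.example.com   # hypothetical virtual host
      - name: X-Probe            # hypothetical custom header
        value: kubelet
```

When `scheme` is HTTPS, the kubelet sends the request without verifying the server certificate, so an HTTPS probe checks reachability rather than certificate validity.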

2. Examples: Monitoring Pod Status with Probes

Difference between readinessProbe and livenessProbe when using HTTP checks

2.1 Enabling the livenessProbe

```console
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# vi 1-http-Probe.yaml
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# cat 1-http-Probe.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myserver-myapp-frontend-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: myserver-myapp-frontend-label
    #matchExpressions:
    #  - {key: app, operator: In, values: [myserver-myapp-frontend,ng-rs-81]}
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend-label
    spec:
      containers:
      - name: myserver-myapp-frontend-label
        image: nginx:1.20.2
        ports:
        - containerPort: 80
        #readinessProbe:   # readinessProbe commented out, livenessProbe enabled
        livenessProbe:
          httpGet:
            #path: /monitor/monitor.html
            path: /index.html
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-myapp-frontend-service
  namespace: myserver
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 40012   # above the default 30000-32767 range, so this cluster uses a custom --service-node-port-range
    protocol: TCP
  type: NodePort
  selector:
    app: myserver-myapp-frontend-label
```

```console
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl apply -f 1-http-Probe.yaml
deployment.apps/myserver-myapp-frontend-deployment created
service/myserver-myapp-frontend-service created
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl get pod -n myserver
NAME                                                  READY   STATUS    RESTARTS   AGE
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   1/1     Running   0          5s
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl exec -it -n myserver myserver-myapp-frontend-deployment-59f5577fd6-z6gkb bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
root@myserver-myapp-frontend-deployment-59f5577fd6-z6gkb:/#
root@myserver-myapp-frontend-deployment-59f5577fd6-z6gkb:/# cd /usr/share/nginx/html/
root@myserver-myapp-frontend-deployment-59f5577fd6-z6gkb:/usr/share/nginx/html# ls
50x.html  index.html
root@myserver-myapp-frontend-deployment-59f5577fd6-z6gkb:/usr/share/nginx/html# mv index.html /tmp/   # move the page elsewhere so the probe starts failing
root@myserver-myapp-frontend-deployment-59f5577fd6-z6gkb:/usr/share/nginx/html# command terminated with exit code 137   # within seconds the liveness probe fails and the container is killed, ending this session (137 = 128 + SIGKILL)
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe#
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl get pod -n myserver -w   # watch the Pod: RESTARTS goes from 0 to 1
NAME                                                  READY   STATUS              RESTARTS     AGE
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   0/1     Pending             0            0s
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   0/1     Pending             0            0s
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   0/1     ContainerCreating   0            0s
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   1/1     Running             0            2s
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   1/1     Running             1 (0s ago)   55s
root@easzlab-deploy:~# kubectl get ep -n myserver -w
NAME                              ENDPOINTS           AGE
myserver-myapp-frontend-service   10.200.104.246:80   2s
```

```console
root@easzlab-deploy:~# kubectl describe pod -n myserver myserver-myapp-frontend-deployment-59f5577fd6-z6gkb
Name:         myserver-myapp-frontend-deployment-59f5577fd6-z6gkb
Namespace:    myserver
Priority:     0
Node:         172.16.88.163/172.16.88.163
Start Time:   Sun, 23 Oct 2022 09:26:51 +0800
Labels:       app=myserver-myapp-frontend-label
              pod-template-hash=59f5577fd6
Annotations:  <none>
Status:       Running
IP:           10.200.104.246
IPs:
  IP:           10.200.104.246
Controlled By:  ReplicaSet/myserver-myapp-frontend-deployment-59f5577fd6
Containers:
  myserver-myapp-frontend-label:
    Container ID:   containerd://5eac324ae6d6917425d667bfab61609b986ffc6f87b9b571e609945af0f8d81e
    Image:          nginx:1.20.2
    Image ID:       docker.io/library/nginx@sha256:38f8c1d9613f3f42e7969c3b1dd5c3277e635d4576713e6453c6193e66270a6d
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Sun, 23 Oct 2022 09:27:46 +0800
    Last State:     Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Sun, 23 Oct 2022 09:26:53 +0800
      Finished:     Sun, 23 Oct 2022 09:27:46 +0800
    Ready:          True
    Restart Count:  1
    Liveness:       http-get http://:80/index.html delay=5s timeout=5s period=3s #success=1 #failure=3
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-shjpn (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  kube-api-access-shjpn:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason     Age                     From               Message
  ----     ------     ----                    ----               -------
  Normal   Scheduled  3m43s                   default-scheduler  Successfully assigned myserver/myserver-myapp-frontend-deployment-59f5577fd6-z6gkb to 172.16.88.163
  Normal   Pulled     2m48s (x2 over 3m41s)   kubelet            Container image "nginx:1.20.2" already present on machine
  Normal   Created    2m48s (x2 over 3m41s)   kubelet            Created container myserver-myapp-frontend-label
  Normal   Started    2m48s (x2 over 3m41s)   kubelet            Started container myserver-myapp-frontend-label
  Warning  Unhealthy  2m48s (x12 over 2m54s)  kubelet            Liveness probe failed: HTTP probe failed with statuscode: 404   # the HTTP probe detected the failure
  Normal   Killing    2m48s (x3 over 2m54s)   kubelet            Container myserver-myapp-frontend-label failed liveness probe, will be restarted   # the kubelet announces that the container will be restarted
  Normal   Pulled     2m48s (x4 over 2m54s)   kubelet            Container image "nginx:1.20.2" already present on machine
  Normal   Created    2m48s (x4 over 3m02s)   kubelet            Created container myserver-myapp-frontend-label
  Normal   Started    2m48s (x4 over 3m02s)   kubelet            Started container myserver-myapp-frontend-label
root@easzlab-deploy:~#
```

2.2 Disabling the livenessProbe and enabling the readinessProbe

```console
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# vim 1-http-Probe.yaml
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# cat 1-http-Probe.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myserver-myapp-frontend-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: myserver-myapp-frontend-label
    #matchExpressions:
    #  - {key: app, operator: In, values: [myserver-myapp-frontend,ng-rs-81]}
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend-label
    spec:
      containers:
      - name: myserver-myapp-frontend-label
        image: nginx:1.20.2
        ports:
        - containerPort: 80
        readinessProbe:
        #livenessProbe:   # liveness probe disabled, readiness probe enabled
          httpGet:
            #path: /monitor/monitor.html
            path: /index.html
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-myapp-frontend-service
  namespace: myserver
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 40012
    protocol: TCP
  type: NodePort
  selector:
    app: myserver-myapp-frontend-label
```

```console
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl apply -f 1-http-Probe.yaml   # re-apply the updated manifest
deployment.apps/myserver-myapp-frontend-deployment configured
service/myserver-myapp-frontend-service unchanged
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe#
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl get pod -n myserver
NAME                                                  READY   STATUS    RESTARTS   AGE
myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk   1/1     Running   0          3m40s
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe#
```

Simulating a failure: exec into the Pod and move the HTML file away.

```console
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl get pod -n myserver
NAME                                                  READY   STATUS    RESTARTS   AGE
myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk   1/1     Running   0          6m55s
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl exec -it -n myserver myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
root@myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk:/# cd /usr/share/nginx/html/
root@myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk:/usr/share/nginx/html# ls
50x.html  index.html
root@myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk:/usr/share/nginx/html# mv index.html /tmp/   # this time the exec session is not terminated
root@myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk:/usr/share/nginx/html#
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl get pod -n myserver -w
NAME                                                  READY   STATUS              RESTARTS      AGE
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   0/1     Pending             0             0s
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   0/1     Pending             0             0s
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   0/1     ContainerCreating   0             0s
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   1/1     Running             0             2s
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   1/1     Running             1 (0s ago)    55s
myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk   0/1     Pending             0             0s
myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk   0/1     Pending             0             0s
myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk   0/1     ContainerCreating   0             0s
myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk   0/1     Running             0             5s
myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk   1/1     Running             0             10s
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   1/1     Terminating         1 (12m ago)   13m
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   0/1     Terminating         1             13m
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   0/1     Terminating         1             13m
myserver-myapp-frontend-deployment-59f5577fd6-z6gkb   0/1     Terminating         1             13m
myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk   0/1     Running             0             7m34s
root@easzlab-deploy:~# kubectl get ep -n myserver -w
NAME                              ENDPOINTS           AGE
myserver-myapp-frontend-service   10.200.105.171:80   19m
myserver-myapp-frontend-service                       20m   # the Pod's address is gone from the Service endpoints
root@easzlab-deploy:~# kubectl get pod -n myserver
NAME                                                  READY   STATUS    RESTARTS   AGE
myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk   0/1     Running   0          8m5s   # Running but not Ready, and not restarted
root@easzlab-deploy:~# kubectl describe pod -n myserver myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk
Name:         myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk
Namespace:    myserver
Priority:     0
Node:         172.16.88.164/172.16.88.164
Start Time:   Sun, 23 Oct 2022 09:40:00 +0800
Labels:       app=myserver-myapp-frontend-label
              pod-template-hash=5f47c9b9b6
Annotations:  <none>
Status:       Running
IP:           10.200.105.171
IPs:
  IP:           10.200.105.171
Controlled By:  ReplicaSet/myserver-myapp-frontend-deployment-5f47c9b9b6
Containers:
  myserver-myapp-frontend-label:
    Container ID:   containerd://8c0033fe8f614cebdf890b080cfe7c5a2cca763141842e7a0e16a1d0b1e36faf
    Image:          nginx:1.20.2
    Image ID:       docker.io/library/nginx@sha256:38f8c1d9613f3f42e7969c3b1dd5c3277e635d4576713e6453c6193e66270a6d
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Sun, 23 Oct 2022 09:40:04 +0800
    Ready:          False
    Restart Count:  0
    Readiness:      http-get http://:80/index.html delay=5s timeout=5s period=3s #success=1 #failure=3
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-58q55 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  kube-api-access-58q55:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason     Age                From               Message
  ----     ------     ----               ----               -------
  Normal   Scheduled  8m11s              default-scheduler  Successfully assigned myserver/myserver-myapp-frontend-deployment-5f47c9b9b6-h88pk to 172.16.88.164
  Normal   Pulled     8m7s               kubelet            Container image "nginx:1.20.2" already present on machine
  Normal   Created    8m7s               kubelet            Created container myserver-myapp-frontend-label
  Normal   Started    8m7s               kubelet            Started container myserver-myapp-frontend-label
  Warning  Unhealthy  1s (x16 over 43s)  kubelet            Readiness probe failed: HTTP probe failed with statuscode: 404   # the probe keeps failing and the Pod stays NotReady
root@easzlab-deploy:~#
```

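Summary: when a probe detects that the Pod is unhealthy, a Pod with only a readinessProbe (and no livenessProbe) is not restarted; it stays Running but NotReady, and its address is removed from the Service endpoints. A Pod with only a livenessProbe (and no readinessProbe) has its container restarted automatically, which shows up as an increasing RESTARTS count.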

2.3 Combined example

```console
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# cat 5-startupProbe-livenessProbe-readinessProbe.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myserver-myapp-frontend-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: myserver-myapp-frontend-label
    #matchExpressions:
    #  - {key: app, operator: In, values: [myserver-myapp-frontend,ng-rs-81]}
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend-label
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: myserver-myapp-frontend-label
        image: nginx:1.20.2
        ports:
        - containerPort: 80
        startupProbe:
          httpGet:
            path: /index.html
            port: 80
          initialDelaySeconds: 5   # wait 5s before the first check
          failureThreshold: 3      # consecutive failures tolerated before the container is killed
          periodSeconds: 3         # probe interval
        readinessProbe:
          httpGet:
            #path: /monitor/monitor.html
            path: /index.html
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
        livenessProbe:
          httpGet:
            #path: /monitor/monitor.html
            path: /index.html
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-myapp-frontend-service
  namespace: myserver
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 40012
    protocol: TCP
  type: NodePort
  selector:
    app: myserver-myapp-frontend-label
```

```console
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe#
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl apply -f 5-startupProbe-livenessProbe-readinessProbe.yaml
deployment.apps/myserver-myapp-frontend-deployment created
service/myserver-myapp-frontend-service created
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl get pod -n myserver
NAME                                                  READY   STATUS    RESTARTS   AGE
myserver-myapp-frontend-deployment-556f9595c5-kzxfp   0/1     Running   0          5s    # probes have not reported success yet
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe# kubectl get pod -n myserver
NAME                                                  READY   STATUS    RESTARTS   AGE
myserver-myapp-frontend-deployment-556f9595c5-kzxfp   1/1     Running   0          11s
root@easzlab-deploy:~/jiege-k8s/20220807/20220806-探针yaml/case3-Probe#
```

Original article: http://www.cnblogs.com/cyh00001/p/16581378.html